Normally the Docker client uploads and downloads images to and from Docker's public registry, Docker Hub. Docker Hub lets us upload images free of cost, and anybody can access the images in our public repositories. We can also run our own registries and pull Docker images from them instead.

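The benefits of having a private registry are:

- We can keep our private images private, so that nobody from outside has access to them.

- We can save time by pushing and pulling images within our own LAN/WAN instead of over the Internet.

- We can save Internet bandwidth by keeping commonly used images locally in our registry.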

In this document I am going to set up a very basic registry on Ubuntu 14.04, without any built-in authentication mechanism and without SSL/TLS.

I will use two Docker nodes: server1 (IP 192.168.10.75) and server2 (IP 192.168.10.76). On server1 I will deploy the registry container, and from server2 I will pull images from our own registry.

Now let’s get hands-on.

Download the registry image from Docker Hub:

```shell
docker pull registry:latest
```

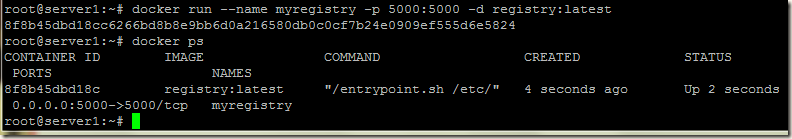

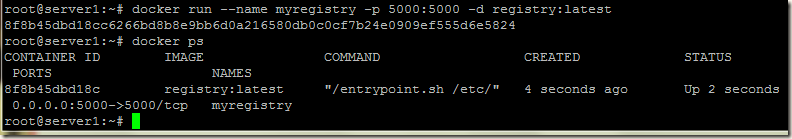

Now let’s run the registry container. The registry listens on port 5000 inside the container, and `-p 5000:5000` publishes that port on server1 so that Docker clients outside the container can reach it:

```shell
docker run --name myregistry -p 5000:5000 -d registry:latest
```

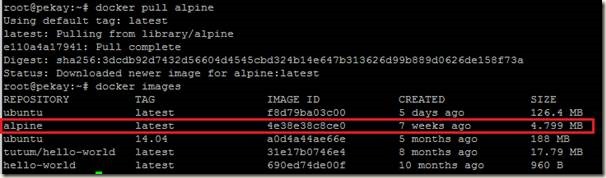

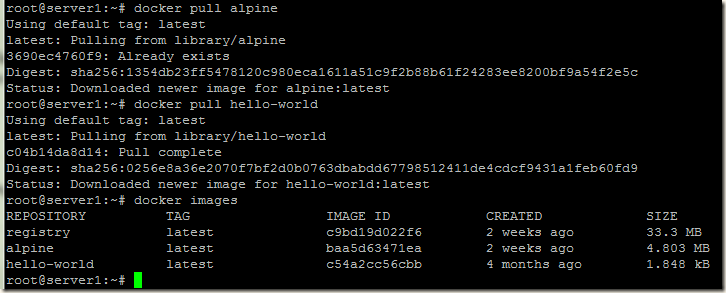

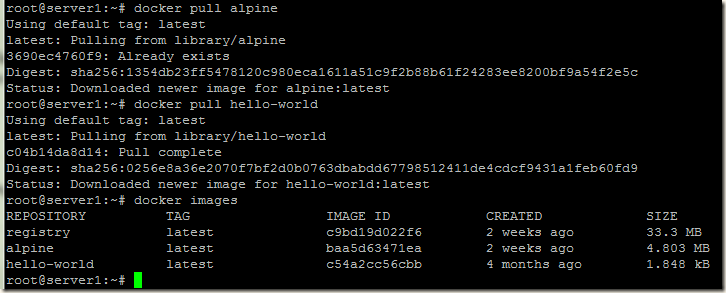
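The container may need a moment before it starts answering requests. A small polling loop against the registry's `/v2/` endpoint, which returns HTTP 200 once the registry is up, can wait for it before we start pushing. This is a sketch under stated assumptions: `wait_for_registry` and its default of 30 one-second attempts are our own choices, and it assumes `curl` is installed on server1.

```shell
# wait_for_registry HOST:PORT [ATTEMPTS]
# Hypothetical helper: poll the registry's /v2/ endpoint once per second
# until it answers with a success status, or give up after ATTEMPTS tries.
wait_for_registry() {
    registry=$1
    attempts=${2:-30}
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -f makes curl exit non-zero on HTTP errors such as 404 or 500
        if curl -fsS "http://$registry/v2/" >/dev/null 2>&1; then
            echo "registry $registry is up"
            return 0
        fi
        sleep 1
        i=$((i + 1))
    done
    echo "registry $registry did not answer after $attempts attempts" >&2
    return 1
}
```

For example, `wait_for_registry localhost:5000` right after `docker run` returns as soon as the registry responds.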

Next we will pull some images from Docker Hub onto server1 and then push them into our own registry (the container named myregistry). For this example we will use the alpine and hello-world images:

```shell
docker pull alpine
docker pull hello-world
```

Both images, alpine and hello-world, are now available on server1, so we can push them into our registry.

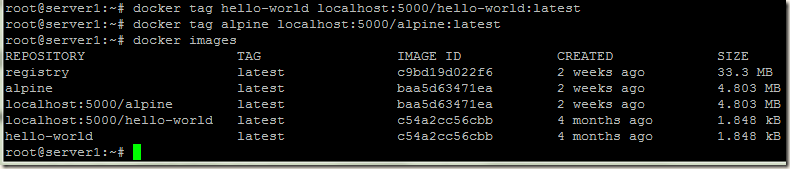

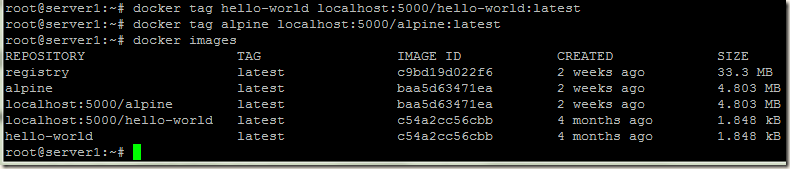
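Re-running `docker pull` is harmless, but in a setup script it can be handy to pull only the images that are missing. The helper below is a hypothetical sketch (`ensure_images` is our own name, not a Docker command) that checks for a local copy with `docker inspect --type=image` before pulling; it assumes a POSIX shell.

```shell
# ensure_images IMAGE...
# Hypothetical helper: pull each IMAGE from Docker Hub only if it is
# not already in the local image store. Stops at the first failed pull.
ensure_images() {
    for image in "$@"; do
        if docker inspect --type=image "$image" >/dev/null 2>&1; then
            echo "already present: $image"
        else
            echo "pulling: $image"
            docker pull "$image" || return 1
        fi
    done
}
```

Usage: `ensure_images alpine hello-world`.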

Before pushing the images, we have to tag them with the address (host and port) of the registry we are going to push them to. If we try to push `localhost:5000/alpine` without tagging first, we get an error saying that no such image exists locally.

Use the `docker tag` command to give each image a name that we can push to our own Docker registry:

```shell
docker tag hello-world localhost:5000/hello-world:latest
docker tag alpine localhost:5000/alpine:latest
```
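The new tag is just the repository name prefixed with the registry's `host:port`. A minimal sketch of that naming rule, with hypothetical helpers `registry_ref` and `tag_for_registry` (our own names) that default the tag to `latest` as in the commands above:

```shell
# registry_ref REGISTRY NAME [TAG]
# Hypothetical helper: build a registry-qualified reference such as
# localhost:5000/alpine:latest (TAG defaults to "latest").
registry_ref() {
    echo "$1/$2:${3:-latest}"
}

# tag_for_registry REGISTRY NAME [TAG]
# Hypothetical helper: tag the local image NAME:TAG so that it can be
# pushed to REGISTRY.
tag_for_registry() {
    docker tag "$2:${3:-latest}" "$(registry_ref "$1" "$2" "$3")"
}
```

For example, `tag_for_registry localhost:5000 alpine` runs the same `docker tag` as the second command above, with the source tag spelled out as `alpine:latest`.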

Now let’s push the alpine and hello-world images into our registry:

```shell
docker push localhost:5000/hello-world
docker push localhost:5000/alpine
```

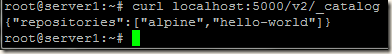

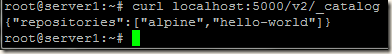
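With more than a couple of images, the tag-and-push steps are easy to script. A minimal sketch, assuming the images are already pulled locally and carry the `latest` tag; `push_images` is a hypothetical name:

```shell
# push_images REGISTRY IMAGE...
# Hypothetical helper: tag each local IMAGE:latest with the registry
# prefix and push it. Stops at the first failing docker command.
push_images() {
    registry=$1
    shift
    for image in "$@"; do
        target="$registry/$image:latest"
        docker tag "$image:latest" "$target" || return 1
        docker push "$target" || return 1
        echo "pushed $target"
    done
}
```

Usage: `push_images localhost:5000 alpine hello-world`.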

We can list the repositories stored in our registry through its catalog API:

```shell
curl <registry>:<port>/v2/_catalog
curl localhost:5000/v2/_catalog
```
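The catalog endpoint answers with a JSON object whose `repositories` array holds the repository names, for example `{"repositories":["alpine","hello-world"]}`. To check from a script that a given repository made it into the registry, a rough sketch can search that response for the quoted name. It assumes `curl` is installed and uses plain string matching rather than real JSON parsing (a JSON tool such as `jq` would be more robust); `registry_has_repo` is a hypothetical name.

```shell
# registry_has_repo REGISTRY NAME
# Hypothetical helper: succeed (0) if NAME appears in the registry's
# /v2/_catalog listing, fail (1) if not, and return 2 if curl fails.
# Matches the quoted name as a string; this is not a full JSON parser.
registry_has_repo() {
    catalog=$(curl -fsS "http://$1/v2/_catalog") || return 2
    case $catalog in
        *"\"$2\""*) return 0 ;;
        *) return 1 ;;
    esac
}
```

Usage: `registry_has_repo localhost:5000 alpine && echo "alpine is in the registry"`.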

Our private registry is ready. Now we will pull the images from the other Docker node, server2 (IP 192.168.10.76).

When we try to pull an image from our new registry (server1, IP 192.168.10.75), we get this error:

```
Error response from daemon: Get https://192.168.10.75:5000/v1/_ping: http: server gave HTTP response to HTTPS client
```

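To resolve the error, edit (or create) the file /etc/docker/daemon.json on server2 and add the following entry:

```json
{ "insecure-registries":["<registry>:<port>"] }
```

For our registry:

```shell
pico /etc/docker/daemon.json
```

```json
{ "insecure-registries":["192.168.10.75:5000"] }
```

The file must remain valid JSON: if it already contains other settings, add the `insecure-registries` key to the existing object instead of appending a second object.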

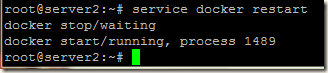

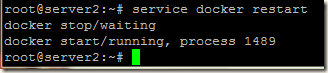
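Editing the file by hand is fine for one node. On several client nodes, a script can create it instead. The sketch below (`add_insecure_registry` is a hypothetical name) writes a new file only when none exists yet, because safely merging a key into an existing JSON file needs a real JSON tool; the path is a parameter so the helper can be tried on a scratch file first. Writing to /etc/docker/daemon.json itself requires root.

```shell
# add_insecure_registry FILE REGISTRY
# Hypothetical helper: create FILE with an insecure-registries entry for
# REGISTRY. Refuses to touch an existing FILE, since merging JSON safely
# in plain shell is error-prone; edit existing files by hand instead.
add_insecure_registry() {
    if [ -e "$1" ]; then
        echo "$1 already exists; add \"$2\" to insecure-registries by hand" >&2
        return 1
    fi
    printf '{ "insecure-registries": ["%s"] }\n' "$2" > "$1"
}
```

Usage (as root on server2): `add_insecure_registry /etc/docker/daemon.json 192.168.10.75:5000`.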

After adding the `insecure-registries` entry, restart the Docker daemon on server2:

```shell
service docker restart
```
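After the restart we can confirm that the daemon picked up the setting. Recent Docker versions list configured insecure registries in `docker info` under an `Insecure Registries:` heading, one address per indented line; the sketch below assumes that layout, which may differ between Docker versions. `daemon_trusts_insecure` is a hypothetical name.

```shell
# daemon_trusts_insecure REGISTRY
# Hypothetical helper: succeed if REGISTRY (host:port) is listed in the
# "Insecure Registries:" section of `docker info`. Assumes one address
# per line; the section ends at the first line with more than one field.
daemon_trusts_insecure() {
    docker info 2>/dev/null | awk -v r="$1" '
        /^ *Insecure Registries:/ { in_section = 1; next }
        in_section && NF != 1     { in_section = 0 }
        in_section && $1 == r     { found = 1 }
        END { exit !found }'
}
```

Usage: `daemon_trusts_insecure 192.168.10.75:5000 && echo "daemon will use plain HTTP for this registry"`.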

Docker treats a registry on localhost as insecure by default, so on server1 it talks to our registry over plain HTTP without TLS. For any other registry address, Docker expects HTTPS (TLS) unless told otherwise, but our registry only speaks plain HTTP, which is exactly what the error above reports. By adding our registry to `insecure-registries`, we tell Docker on server2 to communicate with it without TLS encryption.
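To see this difference from the command line, we can query the registry's `/v2/` endpoint over both schemes. A rough sketch, assuming `curl` is installed on server2 (`probe_registry` is a hypothetical name): against our registry the HTTPS request should fail and the plain HTTP request should succeed, which is why the daemon needs the insecure-registries entry.

```shell
# probe_registry HOST:PORT
# Hypothetical helper: report which scheme the registry answers on.
# Tries HTTPS first (as Docker does), then falls back to plain HTTP.
probe_registry() {
    if curl -fsS --max-time 5 "https://$1/v2/" >/dev/null 2>&1; then
        echo "https"
    elif curl -fsS --max-time 5 "http://$1/v2/" >/dev/null 2>&1; then
        echo "http"
    else
        echo "unreachable"
        return 1
    fi
}
```

Usage on server2: `probe_registry 192.168.10.75:5000`, which for the setup in this document is expected to print `http`.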

Now let’s pull our images:

```shell
docker pull 192.168.10.75:5000/alpine:latest
docker pull 192.168.10.75:5000/hello-world:latest
```
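The pulled images keep the registry prefix in their names (for example `192.168.10.75:5000/alpine:latest`). If we want to refer to them by their short names on server2, we can add a second local tag. A minimal sketch, assuming the `latest` tag; `pull_from_registry` is a hypothetical name:

```shell
# pull_from_registry REGISTRY IMAGE...
# Hypothetical helper: pull each IMAGE:latest from REGISTRY and also tag
# it locally as IMAGE:latest so it can be used without the prefix.
pull_from_registry() {
    registry=$1
    shift
    for image in "$@"; do
        docker pull "$registry/$image:latest" || return 1
        docker tag "$registry/$image:latest" "$image:latest" || return 1
    done
}
```

Usage: `pull_from_registry 192.168.10.75:5000 alpine hello-world`. The short tag is only a local alias: a later plain `docker pull alpine` still fetches from Docker Hub.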

Congrats! We have configured our own registry and successfully pushed and pulled images with it.
